Positional Encoding

Jul 18, 2024

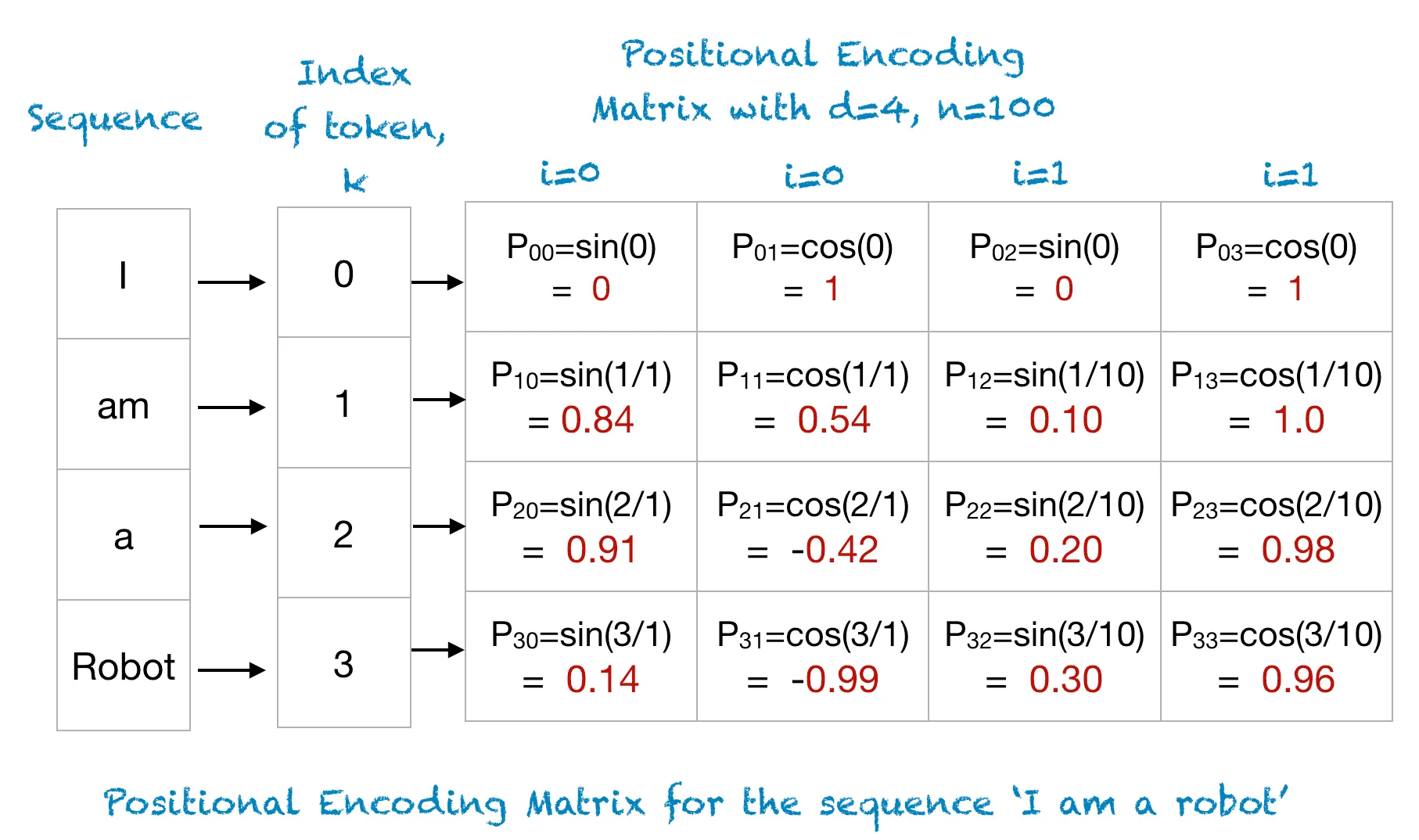

When performing a Vector Embedding of a sentence, you would like retain the positional information of each word when passing your input to your model. This way the model can learn to recognize different uses of the words in the sentence.

The authors of the Attention Is All You Need paper decided to use trigonometric functions to encode the positional information. The and functions have the nice property that the values are constrained between . They also follow a pattern which means that the model can learn accordingly. This is the reason why we don’t use the index of the token itself – it has a value in the range which means that words that appear further in the sentence will have a bigger value. They decided to encode odd vector indexes with and even vector indexes with :

After calculating the positional embedding for the given position, it’s added up together with the corresponding word vector embedding.