Precision and Recall

Jul 18, 2024

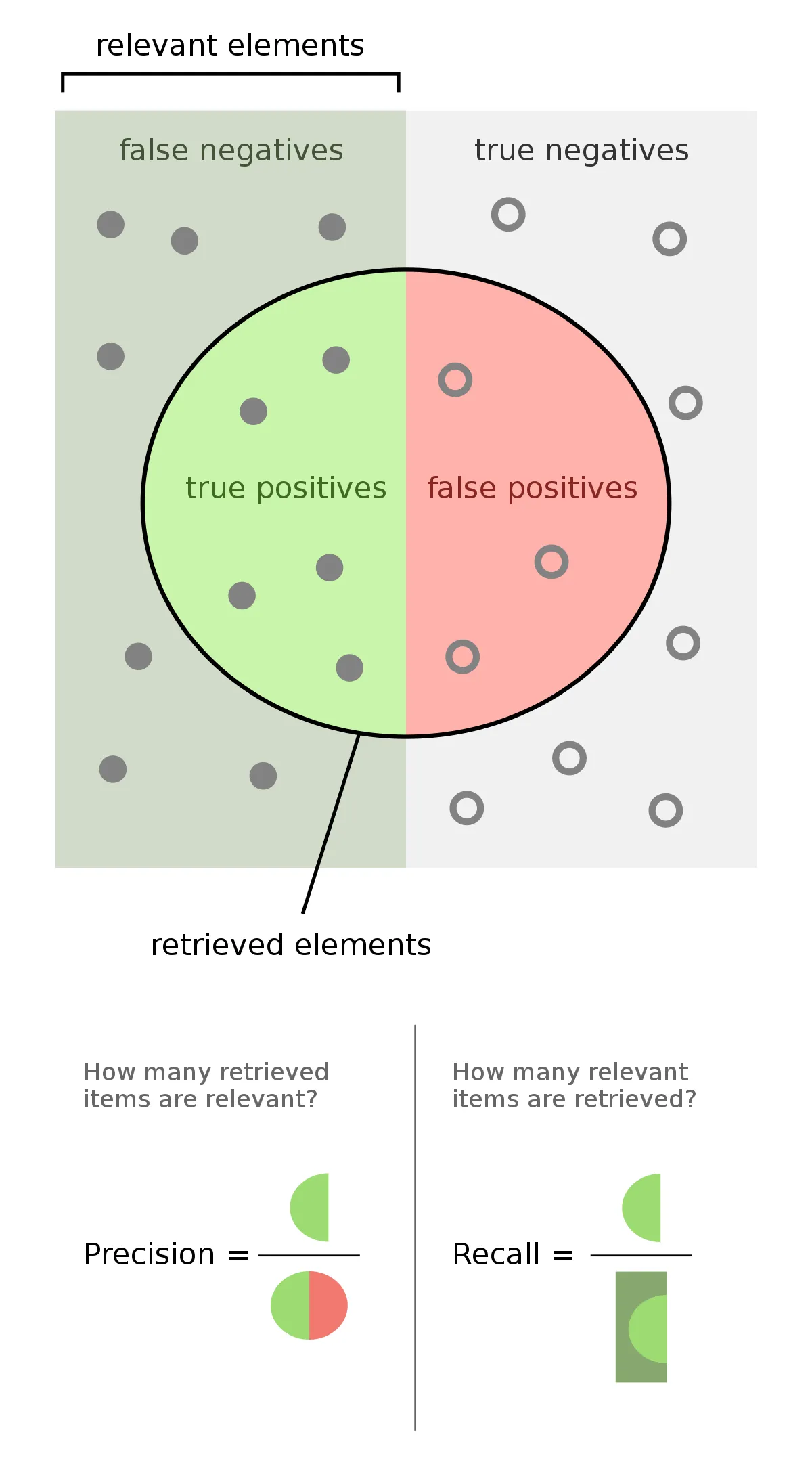

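Precision and recall are two metrics for evaluating the predictions of a classification model. Each one focuses on a different kind of mistake: precision on false positives, recall on false negatives.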

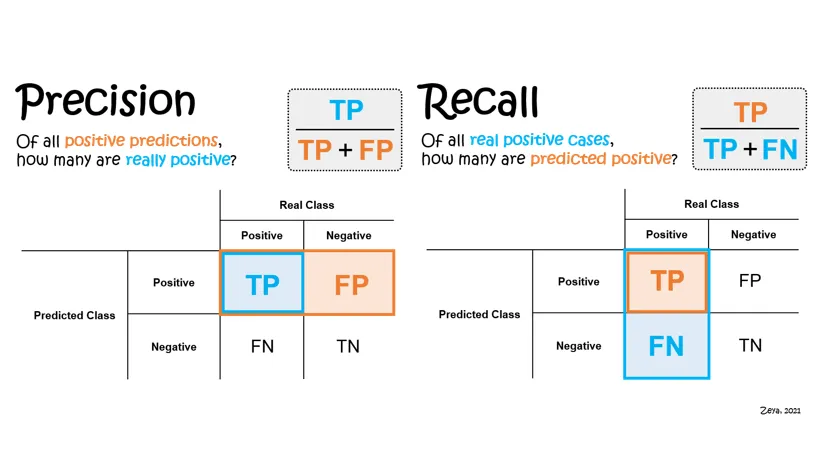

Precision

Precision (also known as positive predictive value) measures how many of the predicted positives were actually positive (correct). It is calculated as TP / (TP + FP).

For example, let’s say you have a binary classification model for determining whether a picture contains a hot dog or not. If your model has 1 TP and 1 FP, its precision is 1 / (1 + 1) = 0.5. This means that when your model predicts that a photo contains a hot dog, it is correct 50% of the time.
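The calculation above can be sketched in a few lines of Python. The function name `precision` and its parameters are made up for illustration; it simply applies TP / (TP + FP) and refuses to answer when the model made no positive predictions at all.

```python
def precision(tp: int, fp: int) -> float:
    """Fraction of predicted positives that were actually positive."""
    predicted_positives = tp + fp
    if predicted_positives == 0:
        # The model never predicted "hot dog", so there is nothing to divide by.
        raise ValueError("precision is undefined without positive predictions")
    return tp / predicted_positives


# The hot dog example: 1 true positive, 1 false positive.
print(precision(tp=1, fp=1))  # 0.5
```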

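If your model has no FPs, it will have 100% precision. You can easily “cheat” this by making your model predict positive only when it is extremely sure, and negative in every other case. (A model that always returns negatives makes no positive predictions at all, so its precision is undefined rather than 100%.) Cheating this way means that your recall will go down.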

Recall

Recall measures how many of the actual positives were identified correctly. It is calculated as TP / (TP + FN).

Continuing with the hot dog example, if your model has 2 TP and 3 FN, its recall is 2 / (2 + 3) = 0.4. This means that out of all the pictures (e.g. 10, with 5 of them being hot dogs), your model will identify 40% of the hot dogs.
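The same calculation as a minimal Python sketch. The function name `recall` and its parameters are made up for illustration; it applies TP / (TP + FN).

```python
def recall(tp: int, fn: int) -> float:
    """Fraction of actual positives that the model identified."""
    actual_positives = tp + fn
    if actual_positives == 0:
        # There were no hot dogs in the data, so there is nothing to find.
        raise ValueError("recall is undefined without actual positives")
    return tp / actual_positives


# The hot dog example: 2 hot dogs found, 3 hot dogs missed.
print(recall(tp=2, fn=3))  # 0.4
```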

If your model has no FNs, it will result in 100% recall. You can easily “cheat” this by making your model always return positives. This means that your precision will go down.
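To see why gaming one metric hurts the other, here is a small sketch using the 10 pictures from the example (5 hot dogs, 5 not). The labels, the helper `precision_recall`, and the two fake "models" are invented for illustration: one says "hot dog" for everything, the other says "hot dog" only for a single picture it is sure about.

```python
# 10 pictures, 5 of which are hot dogs (1 = hot dog, 0 = not a hot dog).
y_true = [1, 1, 1, 1, 1, 0, 0, 0, 0, 0]


def precision_recall(y_true, y_pred):
    """Return (precision, recall); a value is None when it is undefined."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if (tp + fp) else None
    recall = tp / (tp + fn) if (tp + fn) else None
    return precision, recall


# "Cheating" recall: call everything a hot dog.
always_positive = [1] * len(y_true)
print(precision_recall(y_true, always_positive))  # (0.5, 1.0)

# "Cheating" precision: only flag the one picture the model is sure about.
one_safe_guess = [1] + [0] * 9
print(precision_recall(y_true, one_safe_guess))  # (1.0, 0.2)
```

In both cases one metric is perfect while the other shows what the model gave up to get there.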

When to use one over the other

Both can be useful, depending on the context you’re using them in. A simple mnemonic to remember the difference is “PREcision is to PREgnancy tests as reCALL is to CALL center”.

In a pregnancy test, you’d want to have high precision, i.e. few FPs. You don’t want to give people false hopes by making them think they are pregnant. People might end up making rash decisions based on the result. FNs, on the other hand, aren’t as bad, as you’ll find out that you’re pregnant later on anyway.

In a call center for insurance claims, you’d want to have high recall, i.e. few FNs. If the employees’ job is to mark claims as a scam (to be further investigated) or not a scam (to pay money to the claimant directly), they’d want to play it safe and report anything that seems likely to be fraud. This will result in more FPs (meaning that employees reported claims as scams when they were actually legitimate), but that is preferable to letting a scam fall through the cracks (an FN).
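In practice, this choice often comes down to how strict the model (or the employee) is before flagging something as positive. The sketch below assumes a model that outputs a suspicion score per claim and flags everything at or above a threshold. The scores, labels, and the function `precision_recall_at` are all made up for illustration.

```python
# Hypothetical suspicion scores for 8 claims (higher = more suspicious)
# and whether each claim really was a scam (1) or legitimate (0).
scores = [0.95, 0.80, 0.65, 0.55, 0.40, 0.30, 0.20, 0.10]
is_scam = [1, 1, 0, 1, 0, 1, 0, 0]


def precision_recall_at(threshold):
    """Flag claims scoring at or above the threshold, then score the flags."""
    flagged = [1 if s >= threshold else 0 for s in scores]
    tp = sum(1 for t, p in zip(is_scam, flagged) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(is_scam, flagged) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(is_scam, flagged) if t == 1 and p == 0)
    precision = tp / (tp + fp) if (tp + fp) else None
    recall = tp / (tp + fn) if (tp + fn) else None
    return precision, recall


for threshold in (0.7, 0.5, 0.25):
    p, r = precision_recall_at(threshold)
    print(f"threshold={threshold}: precision={p:.2f}, recall={r:.2f}")
# threshold=0.7: precision=1.00, recall=0.50
# threshold=0.5: precision=0.75, recall=0.75
# threshold=0.25: precision=0.67, recall=1.00
```

A strict threshold behaves like the pregnancy test (few FPs, some scams missed), while a lenient one behaves like the call center (no scams missed, more legitimate claims flagged).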

Terminology

TP means True Positive

FP means False Positive

TN means True Negative

FN means False Negative
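As a rough sketch of how these four counts come out of a model's predictions, the snippet below compares each prediction with the actual label. The function name `confusion_counts` and the sample data are made up for illustration.

```python
def confusion_counts(y_true, y_pred):
    """Count TP, FP, TN and FN by comparing predictions with actual labels."""
    counts = {"TP": 0, "FP": 0, "TN": 0, "FN": 0}
    for actual, predicted in zip(y_true, y_pred):
        if predicted and actual:
            counts["TP"] += 1  # predicted hot dog, was a hot dog
        elif predicted and not actual:
            counts["FP"] += 1  # predicted hot dog, was not a hot dog
        elif not predicted and actual:
            counts["FN"] += 1  # predicted not a hot dog, was a hot dog
        else:
            counts["TN"] += 1  # predicted not a hot dog, was not a hot dog
    return counts


y_true = [True, True, False, False, True]
y_pred = [True, False, True, False, True]
print(confusion_counts(y_true, y_pred))
# {'TP': 2, 'FP': 1, 'TN': 1, 'FN': 1}
```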