Forward Propagation

Jul 18, 2024

machine-learning

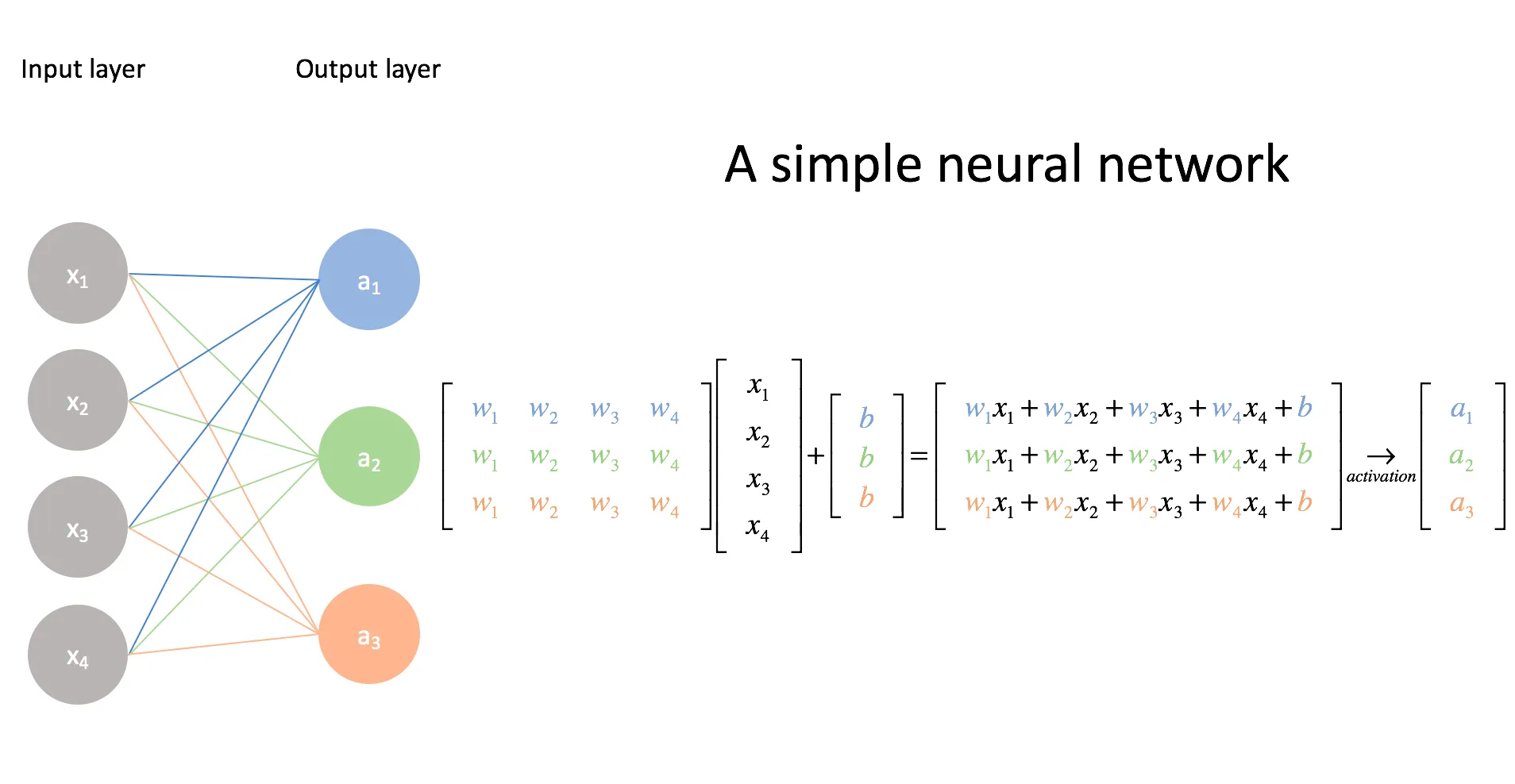

The forward pass in a neural network is also known as the “prediction” step: you give the network an input, and it produces an output.

How it works

- Pass the input into the neural network

- The input’s shape must match the input size that the first layer expects

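- The layer’s activations are equal to $a^{(l)} = f\left(W^{(l)} a^{(l-1)} + b^{(l)}\right)$, where $W^{(l)}$ and $b^{(l)}$ are the weight matrix and bias vector of layer $l$, $a^{(l-1)}$ is the previous layer’s activations, and $f$ is the Activation Function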

- In other words, each neuron in the current layer takes the weighted sum of the previous layer’s activations (each multiplied by its connecting weight), adds a bias, and passes the result through an Activation Function.

- The weighted sum is computed by performing matrix multiplication between the weight matrix and the previous layer’s activations.

- The input layer’s “activations” are simply the input values.

- This is repeated layer by layer until the output layer, whose activations are the network’s output

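The steps above can be sketched in a few lines of plain Python. This is only a minimal illustration, not a reference implementation: the sigmoid activation, the function names `layer_forward` and `forward`, and the tiny 2 → 2 → 1 network with hand-picked weights are all assumptions made for the example. In practice the weighted sum would usually be a single matrix multiplication in a numerical library.

```python
import math


def sigmoid(z):
    """Logistic activation (an assumed choice; any activation function works)."""
    return 1.0 / (1.0 + math.exp(-z))


def layer_forward(W, b, a_prev, activation=sigmoid):
    """Compute one layer's activations: activation(W @ a_prev + b).

    W is a list of rows (one row of weights per neuron in this layer),
    b is a list of biases, a_prev is the previous layer's activations.
    """
    # The incoming vector must match the number of weights per neuron.
    if any(len(row) != len(a_prev) for row in W):
        raise ValueError("input size does not match the layer's weight matrix")
    return [
        activation(sum(w * a for w, a in zip(row, a_prev)) + bias)
        for row, bias in zip(W, b)
    ]


def forward(x, layers, activation=sigmoid):
    """Run the forward pass and return the activations of every layer."""
    activations = [list(x)]  # the input layer's "activations" are the inputs
    for W, b in layers:      # repeat the same computation for each layer
        activations.append(layer_forward(W, b, activations[-1], activation))
    return activations


# Hypothetical 2 -> 2 -> 1 network with hand-picked (weights, biases) per layer.
layers = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0]),
    ([[1.0, 1.0]], [-1.0]),
]

activations = forward([1.0, 1.0], layers)
prediction = activations[-1]  # the output layer's activations
```

Returning every layer's activations rather than only the last one is a design choice for this sketch: it makes each intermediate step easy to inspect.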